
Michael Wood


23 February 2023

ChatGPT: Proceed with Caution

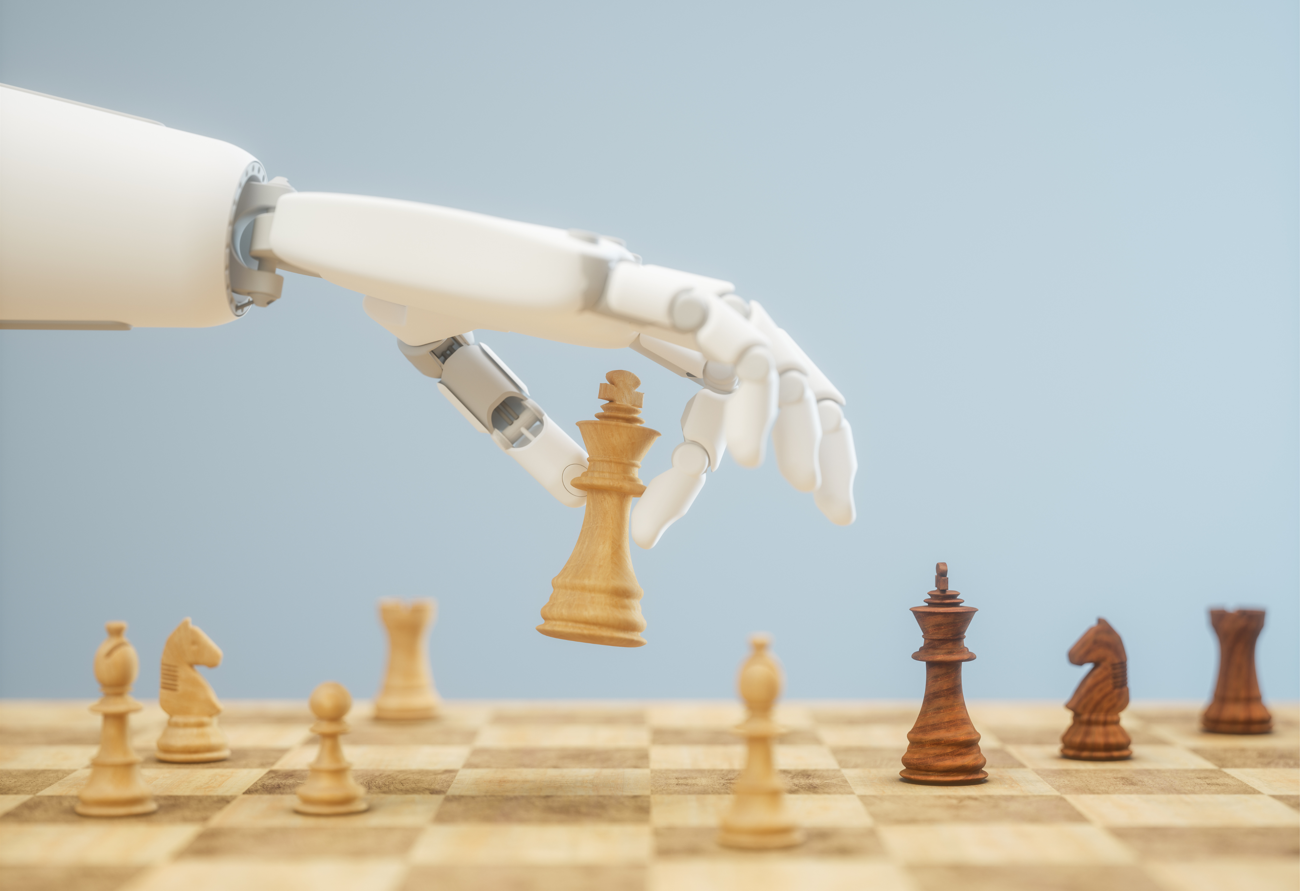

ChatGPT is undoubtedly an incredibly impressive tool. Artificial intelligence has been considered in some form for close to 100 years. In 1935, Alan Turing suggested what computers could achieve and later explained how that could be applied to chess. Crucially, AI is not about brute force; it’s about absorbing data to train models. In chess, there is always a finite number of possible moves for a player; however, to determine the best move by calculation alone, you would need to consider every possible opponent reply, then every response you could make, and so on, resulting in hugely complex calculations. In fact, there are more possible chess games than there are atoms in the observable universe! Rather than attempt to choose the best move by brute force alone, AI involves building a model and then training it on as much data as possible, e.g., every recorded chess game. Instead of performing a hugely complex calculation, the AI will have learnt the best move from the training it received.
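
To give a feel for why brute force breaks down, here is a rough back-of-the-envelope sketch in Python. The figures are assumptions for illustration only, not exact values: roughly 35 legal moves per position, a game of about 80 single moves, and about 10^80 atoms in the observable universe. The function name is my own.

```python
import math

# Assumed, commonly quoted rough figures (illustrative only)
AVERAGE_MOVES_PER_POSITION = 35   # rough branching factor in chess
MOVES_PER_GAME = 80               # single moves (plies) in a typical game
ATOMS_IN_UNIVERSE_LOG10 = 80      # about 10^80 atoms

def game_tree_size_log10(branching_factor, plies):
    """Return log10 of branching_factor ** plies, i.e. the number of
    digits (minus one) in the count of possible move sequences."""
    return plies * math.log10(branching_factor)

exponent = game_tree_size_log10(AVERAGE_MOVES_PER_POSITION, MOVES_PER_GAME)
print(f"Possible move sequences: roughly 10^{exponent:.0f}")
print(f"Atoms in the observable universe: roughly 10^{ATOMS_IN_UNIVERSE_LOG10}")
```

Even with these crude numbers, the count of move sequences comes out far larger than the atom estimate, which is why checking every line of play is not an option.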

Back to ChatGPT: two things strike me as incredibly impressive. The first is the scale of the models and training involved. The tool can seemingly answer any question, meaning the amount of data it must have absorbed is staggering. The second is the ability to shape an answer around some other factor, such as the style of a different person. Answering a question, composing a song or writing a story is already complicated, but recasting the response based on some other subject matter is something I’d never even considered before.

ChatGPT, and AI more generally, will always be a cause for concern, and rightly so. My biggest fear is that AI leads to a world where people’s views and opinions slowly become one. ChatGPT is only as smart as the data that was fed into it and the interactions it has with users. If the data was (even slightly) skewed in a particular direction, and that data is used to answer a user’s question, that user could then go on to produce further data that only serves to reinforce the AI’s original answer; this can create a dangerous feedback loop that skews the data even further. Consider a simple data set in which 75 apples and 25 bananas are sold. You ask AI what your focus should be for the next sales period, and it suggests apples because they performed well last period. Of course, more apples are then sold, and the AI believes it made the correct choice, whereas a human would quite obviously point out that the increase was due to the bigger focus on apples, and that the assessment is actually far trickier to make. The possibility that the data used to train AI can be skewed by mistake is worrying. Still, the bigger fear is that bad actors intentionally cause AI to favour particular opinions or answers.
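
The apples and bananas loop can be sketched as a toy simulation in Python. This is only an illustration under assumptions of my own: total sales stay fixed, the "AI" always recommends last period's best seller, and focusing on an item pulls a fixed fraction (`focus_boost`, a made-up parameter) of the other item's sales towards it.

```python
def simulate_feedback(apples=75.0, bananas=25.0, periods=5, focus_boost=0.10):
    """Return apples' share of total sales at the start and after each period.

    Toy model: each period the "AI" recommends whichever item sold more,
    and the extra focus moves a fraction of the other item's sales to it.
    """
    shares = [apples / (apples + bananas)]
    for _ in range(periods):
        if apples >= bananas:
            # AI recommends apples, so some banana sales shift to apples
            shift = bananas * focus_boost
            apples, bananas = apples + shift, bananas - shift
        else:
            # AI recommends bananas, so some apple sales shift to bananas
            shift = apples * focus_boost
            apples, bananas = apples - shift, bananas + shift
        shares.append(apples / (apples + bananas))
    return shares

for period, share in enumerate(simulate_feedback()):
    print(f"Period {period}: apples are {share:.1%} of sales")
```

In this sketch the apple share only ever climbs, even though nothing about customers' underlying preferences has changed; the recommendation itself produces the data that appears to confirm it.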

The final point I want to make on AI is that while it will inevitably hold more data than any single person, I don’t believe it should ever be treated as a single source of truth in place of what a person is capable of producing. It will be a valuable tool for something you know little about, and there will be specific scenarios in which AI will thrive, but it shouldn’t be the only place where you source information. When I was first introduced to ChatGPT by a colleague, I asked it, rather jokingly, to write a specific plugin for Dynamics 365 and was amazed that it actually succeeded. While the code would have worked, it did not consider other crucial factors, such as code reusability. Impressive and useful, but not good enough.

As for the use of AI going forward, I think a huge amount of care is needed in both design and consumption. The design and the data ingested need to be as wide-ranging as possible, balanced with careful moderation of particularly harmful or offensive content. Care around the consumption of AI means ensuring users understand that AI is not infallible. In my view, the power of the human brain is comparable to the hardness of a diamond: it’s impossible to use a diamond to create a harder material, and I think it’s equally impossible for the human brain to create something more powerful than itself.